Understanding GPT: Everything You Need to Know

Artificial intelligence has changed how we live and work, and the Generative Pre-trained Transformer (GPT) is one of its most influential developments. Created by OpenAI, GPT reshaped natural language processing (NLP) by allowing machines to understand and generate text that reads as if a person wrote it. Many applications now use GPT to create content, boost productivity, or improve the user experience. Why does GPT matter so much to AI research? This post explains.

What Does GPT Mean?

GPT stands for Generative Pre-trained Transformer, a family of large language models developed by OpenAI to understand and generate natural language. Its main tasks include writing, summarizing, translating, and answering questions. Built on deep learning, the model learns the structure and patterns of language from a very large body of text and then produces a coherent response based on the input you give it. Much of GPT's strength comes from its ability to stay consistent with the context of a prompt, so its answers in task-oriented and conversational settings are usually sensible, though not guaranteed to be correct.

"Pre-trained" means the model first reads a broad collection of text such as newspapers, books, and websites. During pre-training, GPT picks up grammar, writing conventions, and general knowledge about the world. After that stage, the model can be adapted for uses such as customer service, programming, or content creation.

GPT Architecture

The Transformer architecture is central to how GPT works. Transformers were introduced in the 2017 paper "Attention Is All You Need" by Vaswani et al. Before transformers, recurrent neural networks (RNNs) and long short-term memory (LSTM) networks dominated NLP. Those models worked, but because they processed text one token at a time, training was slow and long sequences were hard to handle. Transformers process all positions in a sequence in parallel, so they train faster and cope better with long text. Their most significant innovation is the attention mechanism, which lets the model weigh how relevant every other word in the input is when it makes a prediction about a given word.
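To make the attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not GPT's actual implementation: the token vectors are made up, and the same vectors serve as queries, keys, and values, whereas a real transformer derives each of them through learned projections.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input: three tokens, each a made-up 2-dimensional vector.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how much one token "pays attention" to every token in the input. In this toy input, the first token gives more weight to itself and the third token than to the second, because their vectors overlap with its own.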

GPT uses a decoder-only transformer to predict the next word in a sequence. In this design, each prediction depends only on the words that came before it. The model builds up its understanding of the text through many stacked attention layers. The more context it has, the better it can predict the next word and produce coherent writing.
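The two ideas here, looking only at earlier words and producing one word at a time, can be sketched as follows. This is a toy illustration: `TOY_MODEL` is a hypothetical lookup table with made-up probabilities standing in for a trained network, and unlike a real GPT it looks only at the last word instead of the whole context.

```python
def causal_mask(n):
    """Row i marks which positions token i may attend to: itself and earlier ones."""
    return [[j <= i for j in range(n)] for i in range(n)]


# Hypothetical stand-in for a trained model: maps the previous word to
# made-up probabilities for the next word.
TOY_MODEL = {
    "the": {"cat": 0.6, "mat": 0.4},
    "cat": {"sat": 0.9, "the": 0.1},
    "sat": {"on": 1.0},
    "on": {"the": 1.0},
}


def predict_next(context):
    """Pick the most likely next word given the context (greedy decoding)."""
    candidates = TOY_MODEL.get(context[-1], {})
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


def generate(prompt, max_new_words):
    """Autoregressive loop: each predicted word is appended and fed back in."""
    words = list(prompt)
    for _ in range(max_new_words):
        next_word = predict_next(words)
        if next_word is None:
            break
        words.append(next_word)
    return words


mask = causal_mask(3)
generated = generate(["the"], 4)
print(" ".join(generated))  # prints "the cat sat on the"
```

The mask is lower-triangular: the first token sees only itself, while the third sees all three. The generation loop shows why this matters, since at each step the model can only rely on words that already exist.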

How GPT Is Trained

Training GPT is a complex process that requires a great deal of computing power and data. During pre-training, the model processes a huge amount of text from many sources to learn facts, language patterns, and structure. GPT learns to predict the next word by looking at the words that came before it. As it sees more data, it gets better at understanding and producing text that sounds as if a person wrote it.
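As a rough sketch of what "predicting the next word from the words before it" looks like as training data, the snippet below turns one sentence into context and target pairs. The function name `next_word_examples` is hypothetical, and splitting on whitespace is a simplification: real models work on subword tokens and far longer texts.

```python
def next_word_examples(text):
    """Split text into (context, target) pairs for next-word prediction."""
    words = text.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]


examples = next_word_examples("the cat sat on the mat")
for context, target in examples:
    print(" ".join(context), "->", target)
```

A six-word sentence yields five training examples, each asking the model to predict one word from everything that precedes it.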

Pre-training does not rely on hand-labeled data. The text itself, drawn from books, papers, and websites, supplies the answers: the word that actually comes next serves as the label for each prediction. This approach is called self-supervised learning. Training adjusts the model so that the difference between what it predicts and what actually appears in the text is as small as possible. This step is repeated an enormous number of times to make the model more accurate.
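The gap between the model's prediction and the actual text is commonly measured with cross-entropy loss. Here is a minimal sketch, assuming a hypothetical three-word vocabulary and made-up model scores. It only computes the loss and does not show how the model's weights are updated.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, target_index):
    """Negative log of the probability assigned to the correct next word."""
    probs = softmax(scores)
    return -math.log(probs[target_index])


vocab = ["cat", "dog", "mat"]      # hypothetical three-word vocabulary
target = vocab.index("mat")        # the word that actually comes next

poor_scores = [2.0, 1.0, 0.0]      # made-up scores that favor "cat"
better_scores = [0.0, 1.0, 3.0]    # made-up scores that favor "mat"

loss_poor = cross_entropy(poor_scores, target)
loss_better = cross_entropy(better_scores, target)
print(loss_poor > loss_better)  # prints True
```

The loss is lower when the model gives the correct word a higher probability, so pushing the loss down over many examples pushes the model toward better predictions.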

After pre-training, GPT can be adapted to different goals. Fine-tuning trains the model further on a smaller, domain-specific dataset, which makes it better at tasks such as customer support, content creation, or writing code. A fine-tuned model handles specialized terminology more reliably and gives better answers for its target use case.

What GPT Can Do

GPT is versatile. It performs well on many tasks that require understanding natural language, including summarizing, translating, and answering questions. Here is a closer look at each capability.

- Writing Text: GPT generates fluent text from a prompt. It can draft emails, stories, and other creative pieces that are coherent and on topic, which is why businesses, marketers, and content creators use it.

- Summarizing Text: GPT can quickly condense large volumes of text into their core ideas, which makes it well suited to news articles, research papers, reports, and meeting notes.

- Translating Languages: GPT can translate text between languages and help people communicate across them, although it is not as comprehensive as dedicated translation tools.

- Answering Questions: GPT can draw on the information it is given to answer questions, from simple factual ones to difficult ones about figures, papers, and articles.

- Other Tasks: GPT can also help analyze data, write software code, assist users in conversation, and more.

Ethical Considerations and Challenges

Despite its potential, GPT raises ethical concerns. Its output should be accurate, fair, and appropriate, but because GPT learns from publicly available text, it can give biased or offensive answers on sensitive topics. The training data contains prejudice, abusive language, and misinformation, and the model can reproduce them. To reduce unwanted outputs, OpenAI fine-tunes the model on curated datasets and steers its responses with reinforcement learning from human feedback (RLHF). Even so, GPT and other AI models can still be biased or inaccurate, which makes them hard to use responsibly.

Misuse is another concern. Because the model produces realistic text, it can be used to deceive, spread fake news, and fabricate information. OpenAI restricts how GPT may be used through its usage policies, but any powerful tool can be misused. Transparency and accountability in AI systems are similarly difficult. As more organizations adopt GPT, it is crucial to understand how these models reach their outputs and to use them honestly.

What's Next for GPT and AI?

GPT and AI will keep advancing as the technology matures. Researchers are working to make GPT better at understanding language, learning new concepts, reasoning logically, and solving hard problems. Because large models need a lot of computing power, another goal is to make GPT more energy efficient. Other researchers want models that better grasp context, intent, and how people feel.

Over the next several years, GPT is likely to get better at understanding individual needs and generating new ideas. These advances could affect education, healthcare, business, and entertainment: GPT might personalize learning, support diagnosis, and help companies make data-driven decisions.

GPT will become more common as AI improves. Virtual assistants, customer service bots, and content tools will give faster, smarter replies. More capable AI also raises new legal and ethical questions that must be addressed.

Conclusion

GPT is among the most capable language models available, thanks to its powerful and adaptable design. Beyond writing like a human, it can organize information, answer questions, and more. As with any technology, using GPT safely means taking its ethical risks seriously. As GPT improves, it will change how companies and consumers use AI. GPT has a bright future, but its growth must be managed responsibly so that it benefits everyone.

Advertisement

Related Articles

Get Quick Help to Solve Movavi Screen Capture Problems

JetBrains to Retire Aqua IDE Due to Low User Adoption and Feedback

Top 5 Benefits of Using Social Intents for Support

Which Is Better for Your Team in 2025: Asana or Monday?

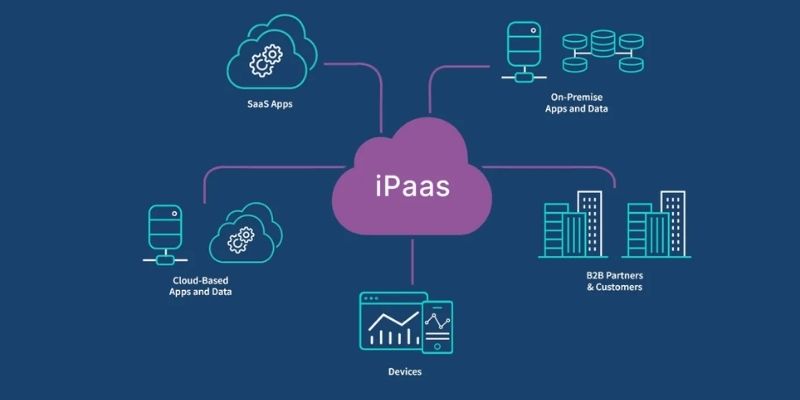

iPaaS Demystified: What Integration Platform as a Service Really Means

No-Code Development: What It Is and How to Start Building Without Code

How Can You Transfer Data from Samsung to iPhone Easily?

How to Change WhatsApp to Business Account Without Losing Data

Final Cut Pro X vs Adobe Premiere Pro: Features, Pros, and Cons Explained

Secure Your Communications: How to Send Encrypted Emails in Gmail

Simple Ways to Transform MXF Files to ASF for Better Playback

novityinfo

novityinfo